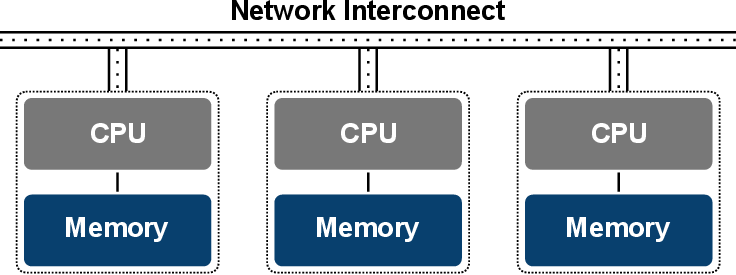

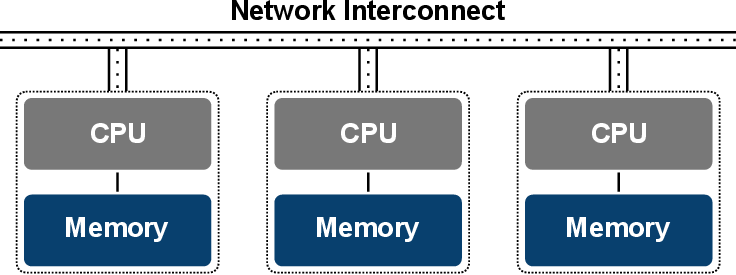

A distributed-memory model connects different processors via a communication network (Figure 5.4). This model originates from a time when a CPU contained a single processing core. Such a setup is rarely found in today’s cluster systems, whose nodes are built from multi-core CPUs. However, the model still serves well as an introduction to distributed computing, as its concepts remain applicable.

The de facto standard for the distributed-memory programming model is the Message Passing Interface (MPI) [135]. Other, less popular distributed parallel programming models are also available, such as High Performance Fortran [136][137] and partitioned global address space [138] models like Co-Array Fortran [139] and Unified Parallel C [140]. MPI is implemented by various commercial and open-source providers, which make their individual implementations available as libraries to be linked to the respective target application. It is important to note that MPI is not restricted to distributed-memory systems; it can also be used on shared-memory systems (Section 5.1.1).

The fundamental difference between the shared-memory and the distributed-memory programming models is that the distributed case uses processes instead of threads. As processes only have access to their own memory address space, accessing non-local data requires inter-process communication. MPI implements this inter-process communication through so-called messages, hence the name Message Passing Interface.
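The following minimal sketch illustrates the idea of separate address spaces and explicit messages. It is not MPI: it uses Python's standard `multiprocessing` module as a stand-in, and the names `worker` and `run`, the per-process data, and the use of the `fork` start method (which assumes a POSIX system) are illustrative assumptions. Each process computes a partial sum on data that exists only in its own memory, and the parent can obtain the result only by receiving a message.

```python
import multiprocessing as mp


def worker(rank, conn):
    """Hypothetical worker: computes on data private to this process."""
    # This list exists only in the address space of the worker process.
    local_data = [rank * 10 + i for i in range(3)]
    partial_sum = sum(local_data)
    # The result becomes visible elsewhere only by sending it as a message.
    conn.send((rank, partial_sum))
    conn.close()


def run(num_workers=3):
    """Start the workers and collect one message from each of them."""
    ctx = mp.get_context("fork")  # assumption: POSIX system with fork()
    connections, processes = [], []
    for rank in range(num_workers):
        parent_end, child_end = ctx.Pipe()
        proc = ctx.Process(target=worker, args=(rank, child_end))
        proc.start()
        child_end.close()  # the parent only reads from its own end
        connections.append(parent_end)
        processes.append(proc)
    # Receiving is the only way for the parent to access the remote results.
    results = dict(conn.recv() for conn in connections)
    for proc in processes:
        proc.join()
    return results


if __name__ == "__main__":
    print(run())
```

In an actual MPI program, the explicit pipe would be replaced by the send and receive operations of the MPI library, and the processes would typically be started by the MPI launcher rather than by the program itself.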

The overall performance of such a distributed system is determined not only by the compute capabilities of the individual processing units, but also by the speed, layout, and switching capabilities of the network interconnect. For instance, collecting the partial results from all processes poses a considerable challenge for the network interconnect, as concurrent transmissions easily saturate the available throughput. If communication or computation dominates the run-time, the parallel application is said to be communication bound or computation bound, respectively.
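To make the distinction concrete, the sketch below estimates the cost of collecting partial results at a single root process and compares it with the compute time. It rests on assumptions that are not part of the text above: a simple latency-plus-bandwidth cost per message, a pessimistic scenario in which all incoming messages share the root's single link and are therefore serialized, and purely illustrative parameter values. The function names are hypothetical.

```python
def transfer_time(message_bytes, latency_s, bandwidth_bytes_per_s):
    """Assumed cost model: fixed latency plus size divided by bandwidth."""
    return latency_s + message_bytes / bandwidth_bytes_per_s


def classify(compute_s, num_processes, message_bytes,
             latency_s, bandwidth_bytes_per_s):
    """Compare compute time with the estimated time to collect all results."""
    # Assumption: the root receives one message from each other process,
    # and these messages are serialized on the root's network link.
    comm_s = (num_processes - 1) * transfer_time(
        message_bytes, latency_s, bandwidth_bytes_per_s)
    label = "communication bound" if comm_s > compute_s else "computation bound"
    return comm_s, label


if __name__ == "__main__":
    # Illustrative values: 0.5 s of computation, 10 MB per partial result,
    # 1 microsecond latency, 1 GB/s link bandwidth.
    for p in (9, 65):
        comm_s, label = classify(0.5, p, 1e7, 1e-6, 1e9)
        print(f"{p} processes: communication {comm_s:.3f} s -> {label}")
```

Under these assumptions, the collection time grows linearly with the number of processes while the compute time per process stays fixed, so the same application can shift from computation bound to communication bound as more processes are added.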