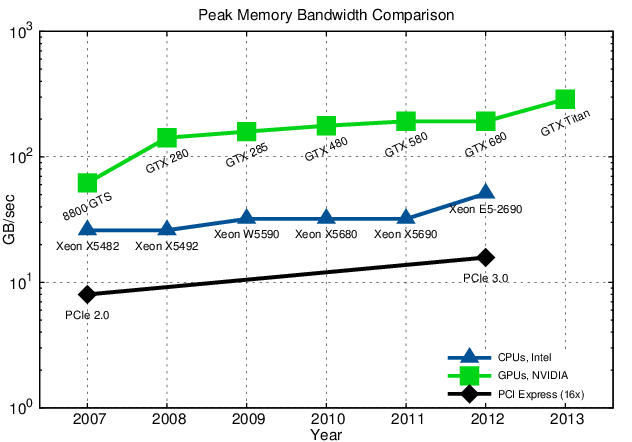

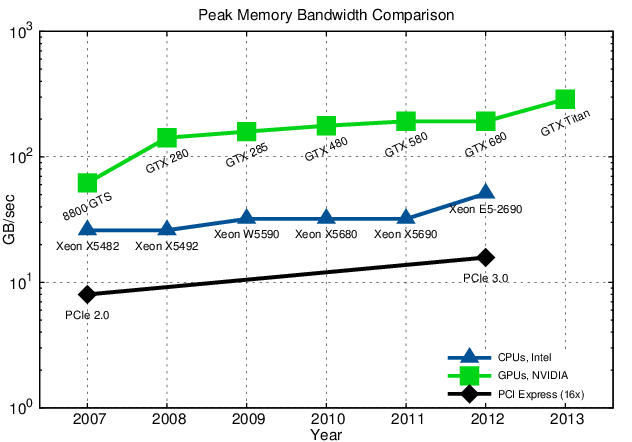

At the time of writing, it is common practice to augment the nodes of supercomputers with accelerators, such as graphics adaptors and so-called coprocessors. For instance, Titan utilizes NVIDIA Tesla K20 graphics processing units (GPUs) [141], whereas Stampede uses Intel Xeon Phi SE10P coprocessors [145]. In general, accelerators offer high core counts and an excellent peak performance-per-watt ratio when compared to common shared-memory clusters [146]. However, one of their fundamental limitations is that they are connected to the host system via a bus. The bandwidth and latency of such a bus, e.g., PCI Express, are inferior to those of, for instance, the host’s memory bus, resulting in a significant communication bottleneck. Figure 5.6 compares the memory bandwidths of typical CPUs with those of accelerators and the PCI Express bus [147]. To overcome this problem, processor vendors aim to integrate the accelerators on the CPU die, thus eliminating the need for a slow bus system [148]. However, if applications can be tuned to minimize such communication in the first place, accelerators are capable of providing outstanding performance with simultaneously reduced energy consumption, heat dissipation, and costs.
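The trade-off between bus traffic and accelerator throughput can be illustrated with a first-order cost model. The following C sketch is not taken from the cited sources: the `machine_model` structure, its fields, and all function names are hypothetical, and the model deliberately ignores overlapping of transfers with computation. It merely estimates when offloading a kernel is worthwhile, given assumed values for bus bandwidth, bus latency, and sustained compute rates.

```c
#include <assert.h>

/* Hypothetical first-order machine description; all values are assumptions
   supplied by the caller, not measurements. */
typedef struct {
    double bus_bandwidth; /* host <-> accelerator bus bandwidth, bytes/s */
    double bus_latency;   /* fixed cost per transfer, seconds */
    double accel_rate;    /* sustained accelerator throughput, flop/s */
    double host_rate;     /* sustained host throughput, flop/s */
} machine_model;

/* Time to move `bytes` over the bus: latency plus size over bandwidth.
   A transfer of zero bytes is assumed not to be issued at all. */
double transfer_time(const machine_model *m, double bytes)
{
    assert(m->bus_bandwidth > 0.0);
    if (bytes <= 0.0)
        return 0.0;
    return m->bus_latency + bytes / m->bus_bandwidth;
}

/* Offloaded execution: copy inputs, compute, copy results back
   (no overlap of communication and computation is modeled). */
double offload_time(const machine_model *m, double bytes_in,
                    double bytes_out, double flops)
{
    assert(m->accel_rate > 0.0);
    return transfer_time(m, bytes_in)
         + flops / m->accel_rate
         + transfer_time(m, bytes_out);
}

/* Execution on the host alone: no bus traffic is required. */
double host_time(const machine_model *m, double flops)
{
    assert(m->host_rate > 0.0);
    return flops / m->host_rate;
}

/* Returns 1 if the model predicts that offloading is faster, 0 otherwise. */
int offload_pays_off(const machine_model *m, double bytes_in,
                     double bytes_out, double flops)
{
    return offload_time(m, bytes_in, bytes_out, flops) < host_time(m, flops);
}
```

In this model, a kernel that performs few floating-point operations per transferred byte is dominated by the bus terms and is better kept on the host, whereas a kernel with high arithmetic intensity amortizes the transfer cost.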

From a software point of view, several approaches to programming accelerators are available, most of which are specific to the accelerator used. Prominent examples are CUDA and OpenCL. Whereas the former solely supports NVIDIA-based boards, the latter is restricted neither to a specific board vendor nor to GPUs, as it can be utilized with CPUs as well. The computational capabilities of Intel accelerators (so-called coprocessors) can be harnessed with, for instance, Intel Cilk Plus, which offers an offloading feature to perform computations on the coprocessor. Recently, the OpenACC standard was released, aiming for an increased level of convenience when programming highly parallel compute targets. OpenACC focuses on a directive-based programming approach, similar to OpenMP.
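To illustrate the similarity between the two directive-based approaches, the following C sketch annotates the same loop once with an OpenACC directive and once with an OpenMP directive. The function names `saxpy_acc` and `saxpy_omp` are hypothetical and chosen for illustration only. A compiler without OpenACC or OpenMP support enabled ignores the respective pragma, so both functions then run serially and produce the same result.

```c
#include <assert.h>
#include <stddef.h>

/* y = a*x + y, annotated for an accelerator via OpenACC.
   The data clauses make the host <-> device transfers explicit:
   x is only copied to the device, y is copied in and back out. */
void saxpy_acc(int n, float a, const float *x, float *y)
{
    assert(x != NULL && y != NULL);
    #pragma acc parallel loop copyin(x[0:n]) copy(y[0:n])
    for (int i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}

/* The same loop, annotated for shared-memory threads via OpenMP.
   No data clauses are needed, as all threads share the host memory. */
void saxpy_omp(int n, float a, const float *x, float *y)
{
    assert(x != NULL && y != NULL);
    #pragma omp parallel for
    for (int i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}
```

In both cases the loop body remains plain C. The main visible difference is that the OpenACC variant has to describe the data movement between host and accelerator.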

The wide variety of programming models and accelerator architectures shows that this field is currently in flux. It is not clear at the moment which hardware and software approach will prevail. This, however, has considerable ramifications for software developers with respect to long-term support. Relying on a specific accelerator technology for boosting an application introduces a significant external dependence. Programming accelerators is very challenging and therefore ties up considerable development resources. However, due to the rather unstable accelerator environment, it is not foreseeable whether a chosen accelerator platform will still be supported in the future, which carries the risk of wasted development effort.