Description

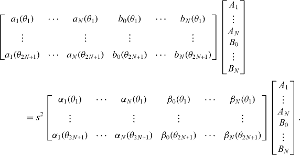

GPUs deliver high performance for the operation C = A * B when A, B, and C are dense matrices. If A and B are both sparse, however, considerable additional effort is required to obtain reasonable performance. The aim of this project is to implement a toolkit of algorithms that analyze the sparsity patterns and can then be composed to yield a fast sparse matrix-matrix multiplication.

Moreover, the implementations should be tuned to GPUs from NVIDIA and AMD as well as Intel's MIC platform.
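To make the role of sparsity-pattern analysis concrete, here is a minimal CPU reference sketch in C++ of a row-wise, two-phase sparse product on matrices in compressed sparse row (CSR) format. It is not the project's implementation and not a GPU kernel. The `Csr` struct and the function names `spgemm_symbolic` and `spgemm` are illustrative assumptions. The symbolic phase inspects only the patterns of A and B to size the result; the numeric phase then fills in the values.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// CSR matrix; struct and field names are illustrative assumptions.
struct Csr {
    std::size_t rows, cols;
    std::vector<std::size_t> row_ptr;  // size rows + 1, offsets into col_idx/val
    std::vector<std::size_t> col_idx;  // column index of each stored entry
    std::vector<double> val;           // value of each stored entry
};

// Symbolic phase: use only the sparsity patterns of A and B to compute
// the row offsets (and thus the number of stored entries) of C = A * B.
std::vector<std::size_t> spgemm_symbolic(const Csr& A, const Csr& B) {
    assert(A.cols == B.rows);
    std::vector<std::size_t> row_ptr(A.rows + 1, 0);
    // marker[j] == i means column j was already counted for row i;
    // A.rows is never a valid row index, so it serves as "not seen".
    std::vector<std::size_t> marker(B.cols, A.rows);
    for (std::size_t i = 0; i < A.rows; ++i) {
        std::size_t count = 0;
        for (std::size_t p = A.row_ptr[i]; p < A.row_ptr[i + 1]; ++p) {
            const std::size_t k = A.col_idx[p];
            for (std::size_t q = B.row_ptr[k]; q < B.row_ptr[k + 1]; ++q) {
                const std::size_t j = B.col_idx[q];
                if (marker[j] != i) { marker[j] = i; ++count; }
            }
        }
        row_ptr[i + 1] = row_ptr[i] + count;
    }
    return row_ptr;
}

// Numeric phase: accumulate each row of C in a dense scratch array and
// copy it into the storage sized by the symbolic phase.
// Column indices within a row of C appear in discovery order (unsorted).
Csr spgemm(const Csr& A, const Csr& B) {
    Csr C;
    C.rows = A.rows;
    C.cols = B.cols;
    C.row_ptr = spgemm_symbolic(A, B);
    C.col_idx.resize(C.row_ptr.back());
    C.val.resize(C.row_ptr.back());
    std::vector<double> acc(B.cols, 0.0);
    std::vector<std::size_t> marker(B.cols, A.rows);
    for (std::size_t i = 0; i < A.rows; ++i) {
        std::size_t next = C.row_ptr[i];
        for (std::size_t p = A.row_ptr[i]; p < A.row_ptr[i + 1]; ++p) {
            const std::size_t k = A.col_idx[p];
            const double a = A.val[p];
            for (std::size_t q = B.row_ptr[k]; q < B.row_ptr[k + 1]; ++q) {
                const std::size_t j = B.col_idx[q];
                if (marker[j] != i) {
                    marker[j] = i;          // first contribution to C(i, j)
                    C.col_idx[next++] = j;
                    acc[j] = a * B.val[q];
                } else {
                    acc[j] += a * B.val[q]; // further contribution to C(i, j)
                }
            }
        }
        for (std::size_t p = C.row_ptr[i]; p < next; ++p) {
            C.val[p] = acc[C.col_idx[p]];
        }
    }
    return C;
}
```

Splitting the work this way lets the output be allocated once with its exact size. The per-row counts from the symbolic phase are also one example of pattern information that a tuned implementation could use to choose between kernels or to balance load, although how the project composes such analyses is left open here.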

Benefit for the Student

The student will gain hands-on experience in GPU programming using both OpenCL and CUDA. In particular, the student will learn the optimization techniques required to obtain high performance.

Benefit for the Project

Sparse matrix-matrix multiplication is a key building block for algebraic multigrid solvers and preconditioners. A faster sparse matrix-matrix multiplication will directly improve the efficiency of these methods.

Requirements

Experience with either OpenCL or CUDA is desired.

Mentors

Contact

Mentors are regularly available in our GSoC IRC channel #TU-CSE-SoC at irc.freenode.net. You can also reach us via the mailing list: send an email using the prefix [VIENNACL] (a subscription is required).

More information

http://www.iue.tuwien.ac.at/cse/wiki/doku.php?id=sparse_matrix-matrix_multiplication