
To fully describe a complex system at the microstate level, an enormous number of different microstates has to be known, and their interactions have to be determined in order to obtain the future behavior from the past states. Because it is not possible to store the amount of data that would be necessary to calculate all effects correctly, a statistics-based description has to be used [70].

A possible way to obtain a representative quantity is to count the number of occupied or unoccupied microstates. Historically, the maximum number of possible states which can theoretically be occupied was chosen to determine the disorder of a system. This maximum value of disorder correlates with the energy of the system.

Because the number of microstates is always a positive integer and is normally enormously large, the corresponding information content of a given system can be counted logarithmically according to information theory. This logarithmic quantity is the so-called entropy, which represents the level of maximum disorder.

Hence, the historical definition of the entropy of a given system is a measure of the number of all possible quantum states that can be reached under a uniform probability distribution. For a given number of reachable microstates of a system, the corresponding entropy of this system is defined as the natural logarithm of that number:

| (2.19) |

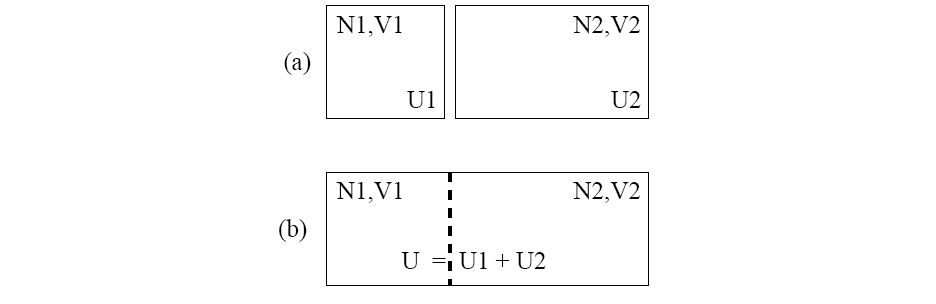
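The definition above can be illustrated with a minimal sketch. It assumes a toy system of two-state elements, which is not taken from the text: the number of microstates is then a binomial coefficient, and the entropy is its natural logarithm. The function names `multiplicity` and `entropy` are hypothetical and chosen only for this illustration.

```python
import math


def multiplicity(n_sites: int, n_excited: int) -> int:
    """Number of microstates of a toy system (assumption): n_excited of
    n_sites two-state elements are in the excited state."""
    return math.comb(n_sites, n_excited)


def entropy(n_microstates: int) -> float:
    """Dimensionless entropy: natural logarithm of the number of
    reachable microstates."""
    if n_microstates < 1:
        raise ValueError("the number of microstates must be a positive integer")
    return math.log(n_microstates)


g = multiplicity(100, 50)  # roughly 1e29 microstates
sigma = entropy(g)         # roughly 66.8
print(g, sigma)
```

Even for only 100 elements, the number of microstates is astronomically large, while its logarithm remains a manageable number. This is the practical reason for counting logarithmically.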

Consider two spatially and thermally insulated systems, each with a certain internal energy, as shown in Figure 2.1a. If they are brought into thermal contact (cf. Figure 2.1b), the number of particles and the volumes remain constant, but the individual energies are no longer spatially confined [69]. Therefore, an energy transfer can be observed. In this case, the total energy remains constant if no other energy fluxes are present. In the most probable case, the energy flows from one side to the other such that the product of the individual numbers of microstates is maximized. This product is again a measure for the total number of states of the global system, and therefore the sum of the individual entropies is maximized as well:

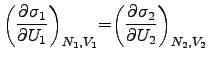

| (2.20) |

|

|

(2.21) |

|

(2.25) |
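The thermal-contact argument can be sketched numerically. The following example assumes two toy systems of two-state elements that share a fixed number of energy quanta. This model and the names `multiplicity` and `most_probable_split` are illustrative assumptions, not part of the original derivation. The sketch searches for the energy split that maximizes the product of the two numbers of microstates and reports the corresponding sum of entropies.

```python
import math


def multiplicity(n_sites: int, n_excited: int) -> int:
    """Number of microstates of a toy system of two-state elements (assumption)."""
    return math.comb(n_sites, n_excited)


def most_probable_split(n_sites_1: int, n_sites_2: int, total_quanta: int):
    """Return (u1, g_total, sigma_total) for the split of total_quanta that
    maximizes the product of the two multiplicities at fixed total energy."""
    u1_min = max(0, total_quanta - n_sites_2)
    u1_max = min(n_sites_1, total_quanta)
    best = None
    for u1 in range(u1_min, u1_max + 1):
        g1 = multiplicity(n_sites_1, u1)
        g2 = multiplicity(n_sites_2, total_quanta - u1)
        g_total = g1 * g2  # number of states of the combined system
        if best is None or g_total > best[1]:
            # The sum of the entropies is the logarithm of the product.
            best = (u1, g_total, math.log(g1) + math.log(g2))
    return best


u1, g_total, sigma_total = most_probable_split(100, 200, 90)
print(u1, sigma_total)  # u1 is 30 for these numbers
```

For these numbers, the most probable split gives the smaller system one third of the quanta, in proportion to its size. Because the logarithm is monotonic, maximizing the product of the numbers of microstates and maximizing the sum of the entropies select the same split.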

An interesting corollary of definition (2.22) is that the fundamental temperature cannot reach the value zero with finite energy resources, because the energy gradient would become infinite, which has been proven to be impossible [71].
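The corollary can be made explicit with a short sketch. It assumes that definition (2.22), which is not reproduced here, has the form customary in statistical thermodynamics. The symbols are chosen for illustration only: entropy, internal energy, and fundamental temperature are written as $\sigma$, $U$, and $\tau$, and the particle number $N$ and volume $V$ are held constant.

```latex
\frac{1}{\tau} = \left( \frac{\partial \sigma}{\partial U} \right)_{N,V}
\qquad \Longrightarrow \qquad
\tau \to 0
\quad \text{requires} \quad
\left( \frac{\partial \sigma}{\partial U} \right)_{N,V} \to \infty
```

Under this assumption, a vanishing fundamental temperature corresponds to an unbounded change of entropy per unit of energy, which is the infinite gradient the corollary refers to.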