Biography

Johannes Ender was born in 1988 in Hohenems, Austria. In 2013 he completed his Master’s studies at the University of Applied Sciences in Vorarlberg. After working in industry for three years, he pursued a Master’s degree in Computational Science at the University of Vienna. In November 2018 he joined the Institute for Microelectronics, where he began his PhD research on the simulation of non-volatile magnetic memory devices.

Improving Spin-Orbit Torque Memory Cell Switching With the Help of Reinforcement Learning

Spin-orbit torque magnetoresistive random access memory (SOT-MRAM) is non-volatile and fast, and it exhibits high endurance. These properties make SOT-MRAM a suitable candidate for replacing existing charge-based memory in registers or high-level caches. However, structures with perpendicular magnetization require an external magnetic field to be switched deterministically.

A recently proposed SOT-MRAM cell architecture enables field-free, purely electrical switching by applying current pulses to two orthogonal heavy metal wires attached to the top and bottom of the ferromagnetic free layer. Current flowing through these wires generates spin-orbit torques that act on the magnetization. The current amplitudes, together with the temporal delay or overlap of the two pulses, span a large parameter space for optimizing switching, and finding efficient pulse sequences remains a challenge.
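To make the size of this parameter space more concrete, the following minimal sketch models each current pulse as a rectangle defined by an amplitude, a start time and a width, computes the delay or overlap between two pulses, and counts the possible on/off patterns when time is divided into fixed slots. The `Pulse` class, the helper names, and the slot discretization are hypothetical illustrations, not the model used in the original work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pulse:
    """Hypothetical rectangular current pulse on one heavy metal wire."""
    amplitude: float  # current amplitude, arbitrary units (assumption)
    start_ps: float   # switch-on time in picoseconds
    width_ps: float   # pulse duration in picoseconds

    @property
    def end_ps(self) -> float:
        return self.start_ps + self.width_ps


def overlap_ps(a: Pulse, b: Pulse) -> float:
    """Time during which both pulses are on (0.0 if they do not overlap)."""
    return max(0.0, min(a.end_ps, b.end_ps) - max(a.start_ps, b.start_ps))


def delay_ps(a: Pulse, b: Pulse) -> float:
    """Gap between the end of the earlier pulse and the start of the later one.

    Returns 0.0 if the pulses overlap or touch.
    """
    first, second = sorted((a, b), key=lambda p: p.start_ps)
    return max(0.0, second.start_ps - first.end_ps)


def count_on_off_sequences(n_slots: int, n_wires: int = 2) -> int:
    """Distinct on/off patterns if each wire is either on or off in each time slot."""
    return 2 ** (n_wires * n_slots)
```

For example, with ten slots of 100 ps (a 1 ns window) and two wires, `count_on_off_sequences(10)` gives 2^20 = 1,048,576 on/off patterns, before the current amplitude is varied at all.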

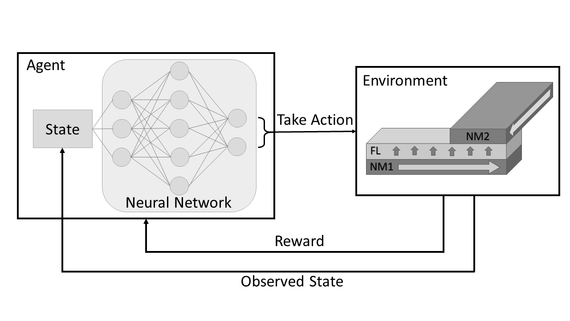

Reinforcement learning (RL), a subfield of machine learning, has been shown to be suitable for such complex optimization problems. Through repeated interaction with an environment, an agent gathers experience in the form of a reward signal that reflects a given optimization objective (cf. Fig. 1). The agent tries to maximize its cumulative reward by continuously refining its policy, which is internally represented by a neural network.
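The agent-environment loop can be illustrated with a minimal REINFORCE-style policy-gradient sketch on a toy problem (for one-step episodes, as here, this reduces to the gradient-bandit algorithm). As assumptions, the environment is a hypothetical three-action stand-in with fixed mean rewards rather than the SOT-MRAM simulation, and the neural network is reduced to a single vector of action preferences passed through a softmax. The agent samples an action, receives a reward, and shifts its policy parameters so that actions with above-average reward become more likely.

```python
import math
import random


class ToyEnvironment:
    """Hypothetical stand-in environment: each action yields a fixed mean reward plus noise."""

    def __init__(self, rewards, noise=0.1, seed=0):
        self.rewards = rewards
        self.noise = noise
        self.rng = random.Random(seed)

    def step(self, action: int) -> float:
        return self.rewards[action] + self.rng.gauss(0.0, self.noise)


class SoftmaxPolicy:
    """Softmax over learnable preferences; a minimal stand-in for a neural-network policy."""

    def __init__(self, n_actions: int, seed=1):
        self.theta = [0.0] * n_actions
        self.rng = random.Random(seed)

    def probs(self):
        m = max(self.theta)  # subtract the maximum for numerical stability
        exps = [math.exp(t - m) for t in self.theta]
        total = sum(exps)
        return [e / total for e in exps]

    def sample(self) -> int:
        return self.rng.choices(range(len(self.theta)), weights=self.probs())[0]


def train(env, policy, episodes=2000, lr=0.1):
    baseline = 0.0  # running average reward, used to reduce gradient variance
    for _ in range(episodes):
        action = policy.sample()
        reward = env.step(action)
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        p = policy.probs()
        # Gradient of log softmax w.r.t. theta_k is 1[k == action] - p_k.
        for k in range(len(policy.theta)):
            grad = (1.0 if k == action else 0.0) - p[k]
            policy.theta[k] += lr * advantage * grad
    return policy
```

After `train(ToyEnvironment([0.0, 1.0, 0.2]), SoftmaxPolicy(3))`, the policy places most of its probability on action 1, the action with the highest mean reward. In the setting described here, the environment would instead wrap the memory cell simulation and the preference vector would be replaced by a neural network.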

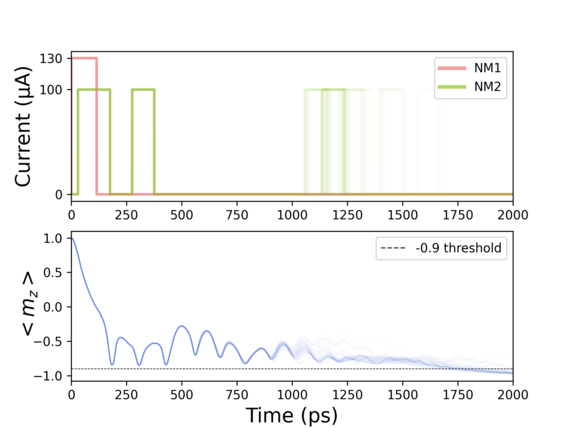

We created an RL environment that incorporates a finite difference simulation of the field-free SOT-MRAM cell described above. The agent interacting with this memory cell environment can switch the currents through the two metal wires on and off individually, with a minimum on/off time of 100 picoseconds. The reward scheme is designed to encourage fast magnetization reversal. By performing many switching simulations, the agent learns how best to apply the pulses to achieve fast switching. Fig. 2 shows the current pulses applied by the trained neural network model and the corresponding trajectories of the z-component of the magnetization over 50 realizations. A random thermal field causes slight variations between the runs, but as the figure shows, the magnetization is reversed deterministically. It can also be observed that the NM1 pulse is applied only once in every run, while the current through the NM2 wire is switched on several times in some runs.
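The environment described above can be sketched as a small wrapper with four discrete actions (each wire on or off). Because every decision step lasts 100 ps, the minimum on/off time is enforced by construction. The finite difference solver is replaced by a hypothetical `advance` callback, and the reward values (a -1 penalty per step and a +10 bonus on switching), the switching threshold m_z ≤ -0.9 and the step limit are assumptions; the text only states that the reward scheme encourages fast reversal.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

STEP_PS = 100.0  # one decision step = minimum on/off time of 100 ps

# Discrete action space: (wire NM1 on?, wire NM2 on?)
ACTIONS: List[Tuple[bool, bool]] = [(False, False), (True, False), (False, True), (True, True)]


@dataclass
class SwitchingEnv:
    """Hypothetical RL environment around a magnetization simulator.

    `advance(mz, nm1_on, nm2_on, dt_ps)` is an assumed callback returning the new m_z;
    in the original work this role is played by a finite difference simulation.
    """
    advance: Callable[[float, bool, bool, float], float]
    threshold: float = -0.9  # assumed criterion for "switched" (start at m_z = +1)
    max_steps: int = 50      # assumed episode limit
    mz: float = 1.0
    t_ps: float = 0.0
    steps: int = 0

    def reset(self) -> float:
        self.mz, self.t_ps, self.steps = 1.0, 0.0, 0
        return self.mz

    def step(self, action: int) -> Tuple[float, float, bool]:
        nm1_on, nm2_on = ACTIONS[action]
        self.mz = self.advance(self.mz, nm1_on, nm2_on, STEP_PS)
        self.t_ps += STEP_PS
        self.steps += 1
        switched = self.mz <= self.threshold
        # Assumed reward shaping: a constant penalty per step favors fast reversal,
        # and a bonus is paid once the switching criterion is met.
        reward = -1.0 + (10.0 if switched else 0.0)
        done = switched or self.steps >= self.max_steps
        return self.mz, reward, done
```

Because every 100 ps step costs -1, an episode that switches after n steps earns a cumulative reward of 10 - n. Maximizing cumulative reward therefore means reversing the magnetization in as few steps as possible.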

Fig. 1: General setup of the reinforcement learning simulation: a simulation of the SOT-MRAM cell acts as the environment with which an agent interacts to build up a policy based on a neural network.

Fig. 2: Results of 50 realizations for fixed material parameters using the learned neural network model. The individual runs are plotted semi-transparently, so regions where multiple lines overlap appear more solid.
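One way to quantify the spread between realizations is to extract a switching time from each m_z trajectory and summarize the results. The sketch below assumes that all trajectories are sampled on a common time grid and uses a hypothetical switching criterion of m_z ≤ -0.9; neither the criterion nor the helper names come from the original work.

```python
from statistics import mean, pstdev
from typing import Dict, List, Optional, Sequence


def switching_time(t_ps: Sequence[float], mz: Sequence[float],
                   threshold: float = -0.9) -> Optional[float]:
    """First sampled time at which m_z reaches the (assumed) threshold; None if never."""
    for t, m in zip(t_ps, mz):
        if m <= threshold:
            return t
    return None


def summarize(t_ps: Sequence[float], runs: List[Sequence[float]],
              threshold: float = -0.9) -> Dict[str, Optional[float]]:
    """Fraction of runs that switched, plus mean and population std of switching times."""
    times = [switching_time(t_ps, mz, threshold) for mz in runs]
    switched = [t for t in times if t is not None]
    return {
        "fraction_switched": len(switched) / len(runs),
        "mean_ps": mean(switched) if switched else None,
        "std_ps": pstdev(switched) if switched else None,
    }
```

A `fraction_switched` of 1.0 together with a small `std_ps` would express the deterministic reversal seen in the figure as numbers.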