B. Multivariate Bernstein Polynomials

Here we give the proofs of the theorems in Section 7.4.

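Theorem B.1

Let $f: I:=[0,1]\times[0,1]\to\mathbb{R}$ be a continuous function. Then the two-dimensional Bernstein polynomials

$$B_{f,n_1,n_2}(x_1,x_2) := \sum_{k_1=0}^{n_1}\sum_{k_2=0}^{n_2} f\Bigl(\frac{k_1}{n_1},\frac{k_2}{n_2}\Bigr)\binom{n_1}{k_1}\binom{n_2}{k_2}\,x_1^{k_1}(1-x_1)^{n_1-k_1}\,x_2^{k_2}(1-x_2)^{n_2-k_2}$$

converge pointwise to $f$ for $n_1,n_2\to\infty$.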

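Proof.

Let $(x_1,x_2)\in I$ be a fixed point and let $\epsilon>0$. Because of Theorem 7.2 we have

$$|B_{f,n_1,n_2}(x_1,x_2)-f(x_1,x_2)| \le |B_{f,n_1,n_2}(x_1,x_2)-B_{f(\cdot,x_2),n_1}(x_1)| + |B_{f(\cdot,x_2),n_1}(x_1)-f(x_1,x_2)| < \epsilon$$

for all $n_1\ge N_1$ and $n_2\ge N_2$. The second summand is smaller than $\epsilon/2$ for $n_1\ge N_1$ because $B_{f(\cdot,x_2),n_1}$ is the Bernstein polynomial for $f(\cdot,x_2)$, and the first summand is smaller than $\epsilon/2$ for $n_2\ge N_2$ because $B_{f,n_1,n_2}(x_1,\cdot)$ is the (one-dimensional) Bernstein polynomial for $t\mapsto B_{f(\cdot,t),n_1}(x_1)$. Q.E.D.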
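
The key observation in the proof above is that the two-dimensional polynomial can be read as a one-dimensional Bernstein polynomial in $x_2$ of a function that is itself a one-dimensional Bernstein polynomial in $x_1$. The following is a minimal numerical sketch of that identity, not part of the original text; the function names (`basis`, `bernstein_1d`, `bernstein_2d`, `bernstein_2d_nested`) are hypothetical and chosen only for illustration.

```python
from math import comb


def basis(n, k, t):
    """Bernstein basis polynomial C(n, k) * t^k * (1 - t)^(n - k)."""
    return comb(n, k) * t**k * (1 - t) ** (n - k)


def bernstein_1d(g, n, t):
    """One-dimensional Bernstein polynomial of g with degree n, evaluated at t."""
    return sum(g(k / n) * basis(n, k, t) for k in range(n + 1))


def bernstein_2d(f, n1, n2, x1, x2):
    """Two-dimensional Bernstein polynomial written as a plain double sum."""
    return sum(
        f(k1 / n1, k2 / n2) * basis(n1, k1, x1) * basis(n2, k2, x2)
        for k1 in range(n1 + 1)
        for k2 in range(n2 + 1)
    )


def bernstein_2d_nested(f, n1, n2, x1, x2):
    """Same value, computed as a 1-D Bernstein polynomial in x2 of the
    function t -> (1-D Bernstein polynomial of f(., t) evaluated at x1)."""

    def inner(t):
        return bernstein_1d(lambda s: f(s, t), n1, x1)

    return bernstein_1d(inner, n2, x2)
```

Both functions evaluate the same finite sum in a different order, so they should agree up to floating-point rounding for any `f` defined on the unit square.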

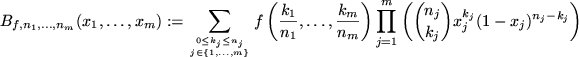

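Definition B.2 (Multivariate Bernstein Polynomials)

Let $n_1,\ldots,n_m\in\mathbb{N}$ and $f$ be a function of $m$ variables. The polynomials

$$B_{f,n_1,\ldots,n_m}(x_1,\ldots,x_m) := \sum_{k_1=0}^{n_1}\cdots\sum_{k_m=0}^{n_m} f\Bigl(\frac{k_1}{n_1},\ldots,\frac{k_m}{n_m}\Bigr)\prod_{j=1}^{m}\binom{n_j}{k_j}\,x_j^{k_j}(1-x_j)^{n_j-k_j}$$

are called the multivariate Bernstein polynomials of $f$.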
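
As an illustration of the definition, here is a minimal Python sketch that evaluates the multivariate Bernstein polynomial by direct summation over all index tuples $(k_1,\ldots,k_m)$. It assumes the standard product form of the definition; the function name `bernstein_multivariate` and its argument layout are hypothetical, and the cost grows like $\prod_j (n_j+1)$, so it is meant for small experiments only.

```python
from itertools import product
from math import comb, prod


def bernstein_multivariate(f, n, x):
    """Evaluate the multivariate Bernstein polynomial of f at the point x.

    f : callable taking m float arguments
    n : sequence of m positive integers (the degrees n_1, ..., n_m)
    x : sequence of m floats in [0, 1]
    """
    if len(n) != len(x):
        raise ValueError("n and x must have the same length")
    total = 0.0
    # Iterate over all index tuples (k_1, ..., k_m) with 0 <= k_j <= n_j.
    for k in product(*(range(nj + 1) for nj in n)):
        # Product of the one-dimensional Bernstein basis polynomials.
        weight = prod(
            comb(nj, kj) * xj**kj * (1 - xj) ** (nj - kj)
            for nj, kj, xj in zip(n, k, x)
        )
        total += f(*(kj / nj for kj, nj in zip(k, n))) * weight
    return total
```

A quick sanity check is that affine functions are reproduced exactly (up to rounding), because each one-dimensional Bernstein operator preserves affine functions.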

Theorem B.3 (Pointwise Convergence)

Let $f: [0,1]^m\to\mathbb{R}$ be a continuous function. Then the multivariate Bernstein polynomials $B_{f,n_1,\ldots,n_m}$ converge pointwise to $f$ for $n_1,\ldots,n_m\to\infty$.

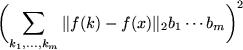

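Proof.

By applying Theorem 7.2 to each summand in

$$|B_{f,n_1,\ldots,n_m}(x)-f(x)| \le \sum_{j=1}^{m} |B^{(j)}(x)-B^{(j-1)}(x)|,$$

where $B^{(j)}$ denotes $f$ with the one-dimensional Bernstein construction of degrees $n_1,\ldots,n_j$ applied in the variables $x_1,\ldots,x_j$ only (so that $B^{(0)}=f$ and $B^{(m)}=B_{f,n_1,\ldots,n_m}$), we see that given an $\epsilon>0$ there are $N_1,\ldots,N_m$ such that

$$|B_{f,n_1,\ldots,n_m}(x)-f(x)| < \epsilon$$

for all $n_1\ge N_1,\ldots,n_m\ge N_m$. Q.E.D.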

Theorem B.5 (Uniform Convergence)

Let $f: [0,1]^m\to\mathbb{R}$ be a continuous function. Then the multivariate Bernstein polynomials $B_{f,n_1,\ldots,n_m}$ converge uniformly to $f$ for $n_1,\ldots,n_m\to\infty$.

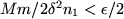

Proof.

We first note that because of the uniform continuity of

on

![$ I:=[0,1]^m$](img791.png)

we have

Given an

, we can find such a

. In order to

simplify notation we set

and

.

always lies in

. We have to estimate

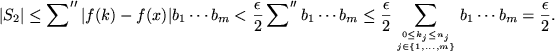

and to that end we split the sum into two parts, namely

where

means summation over all

with

(where

) and

, and

where

means summation over the remaining terms.

For

we have

We will now estimate

. In the sum

the inequality

holds, i.e.,

Hence at least one of the summands on the left hand side is greater

equal

. Without loss of generality we can assume this

is the case for the first summand:

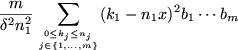

Thus, using Lemma

B.4,

We can now estimate

. Since

is continuous on a compact set

exists.

For

large enough we have

and thus

which completes the proof. Q.E.D.
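
Uniform convergence can be observed numerically by tracking the largest error over a grid of sample points as the degrees grow. The sketch below is only an experiment under stated assumptions: it samples a finite grid (so it gives a lower bound on the true supremum), and the helper names `bernstein_multivariate` and `max_error_on_grid` are hypothetical.

```python
from itertools import product
from math import comb, prod


def bernstein_multivariate(f, n, x):
    """Multivariate Bernstein polynomial of f with degrees n, evaluated at x."""
    total = 0.0
    for k in product(*(range(nj + 1) for nj in n)):
        weight = prod(
            comb(nj, kj) * xj**kj * (1 - xj) ** (nj - kj)
            for nj, kj, xj in zip(n, k, x)
        )
        total += f(*(kj / nj for kj, nj in zip(k, n))) * weight
    return total


def max_error_on_grid(f, n, points_per_axis=11):
    """Largest |B(x) - f(x)| over a uniform grid on [0, 1]^m (m = len(n))."""
    axis = [i / (points_per_axis - 1) for i in range(points_per_axis)]
    return max(
        abs(bernstein_multivariate(f, n, x) - f(*x))
        for x in product(axis, repeat=len(n))
    )


if __name__ == "__main__":
    f = lambda x, y: x * x + y * y
    for degree in (5, 10, 20, 40):
        print(degree, max_error_on_grid(f, (degree, degree)))
```

For $f(x,y)=x^2+y^2$ the error is known in closed form, $x(1-x)/n_1+y(1-y)/n_2$, so the grid maximum is attained at $(1/2,1/2)$ and shrinks like $1/(4n_1)+1/(4n_2)$.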

A reformulation of this fact is the following corollary.

Corollary B.6

The set of all polynomials is dense in $C([0,1]^m)$.

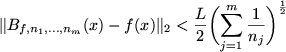

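Theorem B.7 (Error Bound for Lipschitz Condition)

If $f: I:=[0,1]^m\to\mathbb{R}$ is a continuous function satisfying the Lipschitz condition

$$|f(x)-f(y)| \le L\,\|x-y\|_2$$

on $I$, then the inequality

$$|B_{f,n_1,\ldots,n_m}(x)-f(x)| \le \frac{L}{2}\Bigl(\sum_{j=1}^{m}\frac{1}{n_j}\Bigr)^{1/2}$$

holds.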

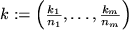

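Proof.

Abbreviating notation we set $b_j:=\binom{n_j}{k_j}x_j^{k_j}(1-x_j)^{n_j-k_j}$ and $k/n:=(k_1/n_1,\ldots,k_m/n_m)$. We will use the Lipschitz condition, Corollary A.7, and Lemma B.4.

$$\begin{aligned}
|B_{f,n_1,\ldots,n_m}(x)-f(x)| &\le \sum_{k_1,\ldots,k_m}|f(k/n)-f(x)|\prod_j b_j \\
&\le L\sum_{k_1,\ldots,k_m}\|k/n-x\|_2\prod_j b_j \\
&\le L\Bigl(\sum_{k_1,\ldots,k_m}\|k/n-x\|_2^2\prod_j b_j\Bigr)^{1/2} \\
&= L\Bigl(\sum_{j=1}^{m}\frac{x_j(1-x_j)}{n_j}\Bigr)^{1/2} \\
&\le \frac{L}{2}\Bigl(\sum_{j=1}^{m}\frac{1}{n_j}\Bigr)^{1/2}.
\end{aligned}$$

This completes the proof. Q.E.D.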
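
A numerical spot check of a Lipschitz error bound can make the statement concrete. The sketch below assumes the bound has the form $\frac{L}{2}\bigl(\sum_j 1/n_j\bigr)^{1/2}$ with $L$ taken with respect to the Euclidean norm; this form is an assumption of the example, and the names `bernstein_multivariate` and `lipschitz_bound` are hypothetical. The test function $f(x,y)=|x-\tfrac12|+|y-\tfrac12|$ has Lipschitz constant $\sqrt{2}$ in that norm.

```python
from itertools import product
from math import comb, prod, sqrt


def bernstein_multivariate(f, n, x):
    """Multivariate Bernstein polynomial of f with degrees n, evaluated at x."""
    total = 0.0
    for k in product(*(range(nj + 1) for nj in n)):
        weight = prod(
            comb(nj, kj) * xj**kj * (1 - xj) ** (nj - kj)
            for nj, kj, xj in zip(n, k, x)
        )
        total += f(*(kj / nj for kj, nj in zip(k, n))) * weight
    return total


def lipschitz_bound(lipschitz_constant, n):
    """Assumed bound (L / 2) * sqrt(sum_j 1 / n_j) for the approximation error."""
    return 0.5 * lipschitz_constant * sqrt(sum(1.0 / nj for nj in n))


if __name__ == "__main__":
    f = lambda x, y: abs(x - 0.5) + abs(y - 0.5)
    n = (8, 12)
    axis = [i / 10 for i in range(11)]
    worst = max(
        abs(bernstein_multivariate(f, n, x) - f(*x)) for x in product(axis, repeat=2)
    )
    print("largest sampled error:", worst)
    print("assumed bound:        ", lipschitz_bound(sqrt(2.0), n))
```

The sampled error staying below the bound is consistent with the theorem but does not prove it; the grid only probes finitely many points.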

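Theorem B.8 (Asymptotic Formula)

Let $f: I:=[0,1]^m\to\mathbb{R}$ be a $C^2$ function and $x\in I$, then

$$\lim_{n\to\infty} n\,\bigl(B_{f,n,\ldots,n}(x)-f(x)\bigr) = \sum_{j=1}^{m}\frac{x_j(1-x_j)}{2}\,\frac{\partial^2 f}{\partial x_j^2}(x).$$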

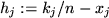

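Proof.

We define the vector $h$ through $h_j:=k_j/n-x_j$, where the $k_j$ are the integers over which we sum in $B_{f,n,\ldots,n}$. Using Theorem A.14 we see

$$f\Bigl(\frac{k_1}{n},\ldots,\frac{k_m}{n}\Bigr) = f(x) + \sum_{j=1}^{m} h_j\,\frac{\partial f}{\partial x_j}(x) + \frac{1}{2}\sum_{i,j=1}^{m} h_i h_j\,\frac{\partial^2 f}{\partial x_i\,\partial x_j}(x) + \|h\|^2\rho(h),$$

where $\lim_{h\to 0}\rho(h)=0$. Summing this equation like the sum in $B_{f,n,\ldots,n}$ we obtain

$$B_{f,n,\ldots,n}(x) = f(x) + \frac{1}{2n}\sum_{j=1}^{m} x_j(1-x_j)\,\frac{\partial^2 f}{\partial x_j^2}(x) + \sum_{k_1,\ldots,k_m}\|h\|^2\rho(h)\prod_{j=1}^{m}\binom{n}{k_j}x_j^{k_j}(1-x_j)^{n-k_j},$$

since many terms vanish or can be summed because of Lemma B.4. Noting that $\rho(h)\to 0$ for $h\to 0$, we can apply the same technique as in the proof of Theorem B.5 for estimating the last sum in the last equation, i.e., splitting the sum into two parts for $\|h\|<\delta$ and $\|h\|\ge\delta$. Hence we see that for all $\epsilon>0$ this sum is less than or equal to $\epsilon/n$ for all sufficiently large $n$, which yields the claim. Q.E.D.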
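
The asymptotic behaviour can be probed numerically by comparing the scaled error $n\,(B_{f,n,\ldots,n}(x)-f(x))$ with a candidate limit. The sketch below assumes the limit has the Voronovskaya-type form $\sum_j \tfrac{x_j(1-x_j)}{2}\,\partial^2 f/\partial x_j^2(x)$; that form, the helper names, and the requirement that the caller supplies the pure second partial derivatives by hand are all assumptions of this example.

```python
from itertools import product
from math import comb, prod


def bernstein_multivariate(f, n, x):
    """Multivariate Bernstein polynomial of f with degrees n, evaluated at x."""
    total = 0.0
    for k in product(*(range(nj + 1) for nj in n)):
        weight = prod(
            comb(nj, kj) * xj**kj * (1 - xj) ** (nj - kj)
            for nj, kj, xj in zip(n, k, x)
        )
        total += f(*(kj / nj for kj, nj in zip(k, n))) * weight
    return total


def scaled_error(f, n, x):
    """n * (B_{f,n,...,n}(x) - f(x)) with the same degree n in every variable."""
    degrees = (n,) * len(x)
    return n * (bernstein_multivariate(f, degrees, x) - f(*x))


def assumed_limit(second_partials, x):
    """Candidate limit: sum_j x_j (1 - x_j) / 2 * d^2 f / dx_j^2 (x).

    second_partials : list of callables, one per variable, each returning the
                      pure second partial derivative of f at a point.
    """
    return sum(
        0.5 * xj * (1 - xj) * d2(*x) for xj, d2 in zip(x, second_partials)
    )


if __name__ == "__main__":
    f = lambda x, y: x**3 + y**2
    second_partials = [lambda x, y: 6 * x, lambda x, y: 2.0]
    point = (0.3, 0.6)
    print("assumed limit:", assumed_limit(second_partials, point))
    for n in (10, 50, 200):
        print(n, scaled_error(f, n, point))
```

For this polynomial test function the scaled error differs from the candidate limit by a term of order $1/n$, so the printed values should approach the limit as `n` grows.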

Clemens Heitzinger

2003-05-08