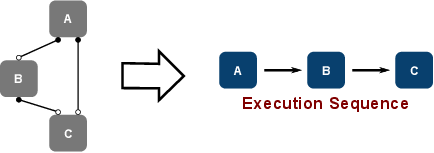

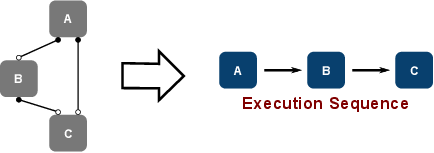

Ultimately the components must be executed. The sequence of execution, however, depends on the input and output data communication requirements of each utilized component. Scheduler mechanisms are required to determine the execution order in such a way that upon a component’s execution all the required input data is available, i.e., the input data has been generated by other components (Figure 5.10).

Essential for usability is an automatic dependence resolution process. The end user should merely list the components to be utilized by the framework, but should not be burdened with the responsibility of establishing the connections manually. The framework must automatically - based on the data communication layer and the thus provided dependencies of each component - resolve the dependencies and compute an appropriate execution sequence.

Also the scheduler should support different serial and parallel execution methods. Most important is data parallelism based on the MPI, where each component works on a subset of the data. This particular parallelization mode is especially important to the field of CSE, due to the typically large simulation domains where a specific physical model is evaluated. Methods like domain decomposition are used to partition the data set into data chunks of comparable computational effort which are then processed in an MPI-based computing environment [100], such as hybrid computing clusters. Aside from data parallelism also task parallelism merits special consideration. In this particular execution the components are executed in parallel, meaning that, for instance, two components might be executed by two different processes. Among the application areas are wave front simulations [149]. However, a serial mode is also essential, as not all applications inherently support or favor a distributed memory model.

With respect to task parallelism, a mechanism has to be provided allowing to guide the automatic task scheduling. For instance, an advanced user and a developer has knowledge about the computational effort of a task and thus by extension about the estimated execution times. If the scheduler has knowledge about the expected computational load of each task, the scheduling can be adapted, for example, the task with the long run-time is processed on a separate process than the rest of the tasks, offering short run-times and ultimately improving parallel execution efficiency.

The challenges of scheduler implementations are primarily related to automatically determining a correct execution sequence especially in a parallel setting. In particular, the usability should be maximized whereas the code base should be minimized to favor maintainability. Also the task parallel scheduling has to be augmented with an optional task-specific weight factor, reflecting the individual execution run-time. In this regard, the challenge is to incorporate the advanced user or developer-provided task weights into the scheduling, either by hard-coding it into the component implementations or by using the non-intrusive configuration mechanism, which is discussed in the subsequent section.