|

|

|

|

Previous: 3.7.1 Garbage Collection Up: 3.7 Implementation Details Next: 4. Process Simulation Applications |

For each memory region that is allocated on the free store, the operating system needs to keep track of, at least, the size and the address3.8. Therefore, the memory and performance overhead particularly for small sized objects like integer variables, is very high. By delegating parts of the operating system's memory management to the application, some of this overhead can be eliminated. A technique to manage free store memory is a so-called memory pool.

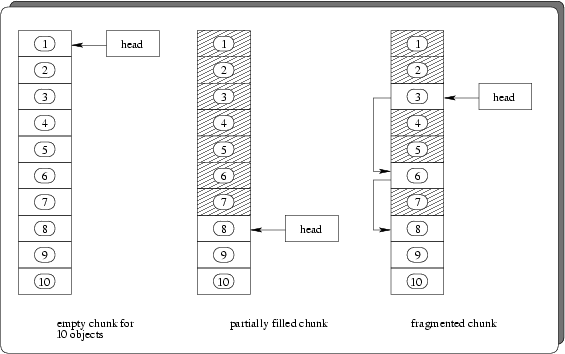

In a memory pool the allocated memory is increased and decreased in chunks, each capable of storing several objects. The C++ runtime system passes address and size of an object to the new and delete operators of a class, thus the explicit storage of this information can be omitted3.9. In order to handle fragmentations that occur upon freeing objects with non consecutive memory addresses, a single linked list structure as depicted in Fig. 3.21 is used.

|

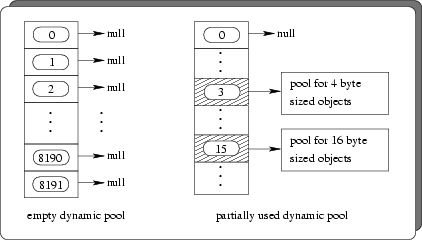

Unfortunately, the above described simple pool can not directly

be applied for a general implementation of the allocation and deallocation

operators. This is due to the fact that it is incapable of being safely used in

class hierarchies, since the size of the objects managed by this pool is

determined at compile time (hard-coded). The (dynamically determined) size

arguments to operators new and delete are completely ignored. As a

consequence, inheritance of the operators to derived classes that require more

memory for an object instance would be fatal and result in memory

corruption. Moreover, such a misuse could not even be detected or avoided at

either compile- or runtime. Therefore, the simple pool was extended to handle

objects of arbitrary sizes. This dynamic sized pool internally uses several

statically (sized) pools, each for a given object size. The static pools are

allocated on demand as soon as memory for the first object of a given size is

requested. The size argument supplied to the

new operator is thereby used as an index into an array of

pointers to static pools. The array size determines the biggest object that

can be managed in the pool. For the WAFER-STATE-SERVER the size was set to a value of

![]() which allows to manage

which allows to manage ![]() kB sized objects

(c. f. Fig. 3.22).

kB sized objects

(c. f. Fig. 3.22).

|

2003-03-27