
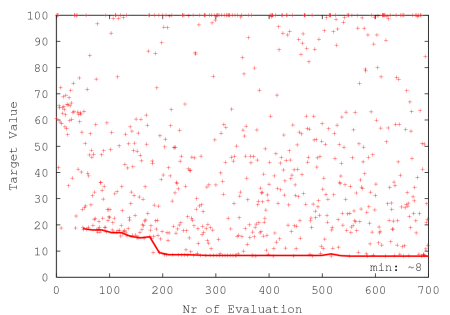

Fig. 5.19 shows the progress of the very fast simulated re-annealing algorithm. Compared to the genetic algorithm, this optimizer reaches the same target value with approximately one third of the evaluations. The results were achieved with standard parameter settings.
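To make the evaluation budget concrete, the following is a minimal sketch of a very fast annealing loop in the spirit of Ingber's published formulation. It is not the implementation that produced the results above. The function name `vfsr_minimize`, the parameters `t0` and `c`, and their default values are assumptions chosen for illustration. The re-annealing step is omitted, and a single temperature is used for both candidate generation and acceptance, which is a simplification.

```python
import math
import random


def vfsr_minimize(f, lower, upper, n_evals=2000, t0=1.0, c=1.0, seed=0):
    """Illustrative very fast annealing loop (no re-annealing step).

    f            : objective taking a list of floats
    lower, upper : per-parameter bounds
    n_evals      : total number of objective evaluations
    t0, c        : initial temperature and schedule constant (assumed values)
    """
    rng = random.Random(seed)
    dim = len(lower)
    x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
    fx = f(x)  # evaluation number 1
    best_x, best_f = list(x), fx

    for k in range(1, n_evals):
        # Exponential schedule T(k) = T0 * exp(-c * k**(1/D)).
        temp = max(t0 * math.exp(-c * k ** (1.0 / dim)), 1e-12)

        cand = []
        for xi, lo, hi in zip(x, lower, upper):
            while True:
                # Temperature-dependent step y in [-1, 1]; small steps
                # dominate as the temperature drops.
                u = rng.random()
                y = math.copysign(1.0, u - 0.5) * temp * (
                    (1.0 + 1.0 / temp) ** abs(2.0 * u - 1.0) - 1.0
                )
                xn = xi + y * (hi - lo)
                if lo <= xn <= hi:  # resample until inside the bounds
                    break
            cand.append(xn)

        fc = f(cand)
        # Metropolis acceptance: always accept improvements, sometimes
        # accept a worse point while the temperature is still high.
        if fc <= fx or rng.random() < math.exp(-(fc - fx) / temp):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = list(x), fx

    return best_x, best_f
```

Calling `vfsr_minimize(lambda p: sum(v * v for v in p), [-5.0, -5.0], [5.0, 5.0])` returns the best point found and its target value after exactly `n_evals` evaluations, which is the quantity compared between optimizers in this section.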


It can be stated that, among the evaluated global optimization strategies, simulated annealing seems best suited to the inverse modeling application. Furthermore, we observed that for a larger number of evaluations (several thousand), very fast simulated re-annealing delivered nearly optimal target values, whereas the optima achieved with the genetic algorithm did not drop below a certain value. This calls for further experiments with ![]() and ![]() and other parameters during the evolution. However, the optimal settings for these parameters are difficult to determine. We found that the very fast simulated re-annealing algorithm is faster than the genetic algorithm by at least a factor of three. This is consistent with the experiments of L. Ingber [108], who reports a speed difference of about one order of magnitude.

The local gradient-based method is the fastest if the initial guess is chosen appropriately; otherwise it stops in a local minimum or even fails to converge. In that case, the whole optimization must be restarted with a different initial guess. Compared to a local optimizer, the presented global optimization techniques are robust, which is essential in cases where an appropriate initial guess is not available.
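The dependence of a local method on its initial guess, and the restart strategy described above, can be sketched as follows. This is an illustrative example only: the one-dimensional test function, the fixed step size, and the helper names `gradient_descent` and `multistart` are assumptions, not the optimizer used in this work.

```python
import random


def f(x):
    # Hypothetical test function with a global minimum near x = -1.30
    # and a shallower local minimum near x = 1.13.
    return x**4 - 3.0 * x**2 + x


def grad(x):
    # Analytic derivative of f.
    return 4.0 * x**3 - 6.0 * x + 1.0


def gradient_descent(grad, x0, step=0.01, tol=1e-8, max_iter=10000):
    """Plain fixed-step gradient descent; returns (x, converged)."""
    x = x0
    for _ in range(max_iter):
        g = grad(x)
        if abs(g) < tol:
            return x, True
        x -= step * g
    return x, False  # iteration budget exhausted without convergence


def multistart(f, grad, lower, upper, n_starts=20, seed=0):
    """Restart the local optimizer from random initial guesses and keep
    the best converged result (None if no run converged)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_starts):
        x, converged = gradient_descent(grad, rng.uniform(lower, upper))
        if converged and (best is None or f(x) < f(best)):
            best = x
    return best
```

Starting `gradient_descent` at `x0 = 1.5` ends in the local minimum, while `x0 = -1.5` reaches the global one. `multistart(f, grad, -2.0, 2.0)` recovers the global minimum at the cost of many more evaluations, which is the trade-off that motivates the global strategies.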

2003-03-27