
In the following, several experiments carried out with a combination of very fast simulated re-annealing (VFSR) and a local optimization strategy are presented. The optimizations were performed with different numbers of initial evaluations and of evaluations between two sub-optimization runs.
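Very fast simulated re-annealing is due to Ingber. To make the global part of the combination concrete, the sketch below implements the published VFSR generating distribution and its exponential annealing schedule T(k) = T0 exp(-c k^(1/D)) in Python. It is a minimal illustration under stated assumptions, not the optimizer implementation used in these experiments: the function names, the parameters `t0` and `c`, and the policy of redrawing out-of-bounds steps are assumptions of this sketch.

```python
import math
import random
from typing import List, Sequence


def vfsr_temperature(t0: float, c: float, k: int, dim: int) -> float:
    """VFSR annealing schedule T(k) = T0 * exp(-c * k**(1/D))."""
    return t0 * math.exp(-c * k ** (1.0 / dim))


def vfsr_generate(x: Sequence[float], lower: Sequence[float],
                  upper: Sequence[float], temps: Sequence[float],
                  rng: random.Random) -> List[float]:
    """Draw a new trial point around x with the VFSR generating distribution.

    For each parameter a uniform u in [0, 1) is mapped to
        y = sgn(u - 1/2) * T * ((1 + 1/T)**|2u - 1| - 1),  y in [-1, 1],
    and x_i + y * (upper_i - lower_i) is kept if it lies inside the bounds;
    otherwise a new u is drawn for that parameter (assumed policy).
    """
    new = []
    for xi, lo, hi, t in zip(x, lower, upper, temps):
        while True:
            u = rng.random()
            y = math.copysign(1.0, u - 0.5) * t * (
                (1.0 + 1.0 / t) ** abs(2.0 * u - 1.0) - 1.0)
            candidate = xi + y * (hi - lo)
            if lo <= candidate <= hi:
                new.append(candidate)
                break
    return new
```

As the per-parameter temperatures fall, most proposed steps become small while occasional long jumps remain possible; this long-tailed behaviour is what permits VFSR's fast exponential schedule.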

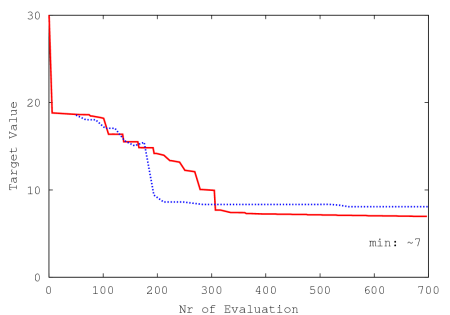

Fig. 5.24 depicts the case of a combined optimization


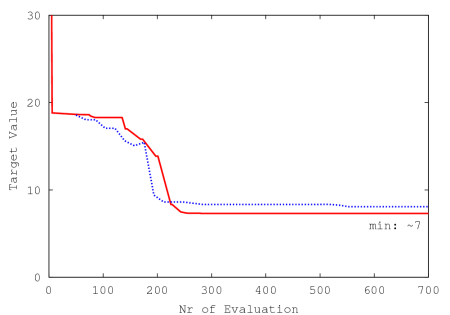

Fig. 5.25 depicts a strategy that reaches the best target value of the reference run even faster. In this experiment the optimizers are used alternately: the point with the best target value found by one optimizer serves as the initial guess for the other. ![]() initial evaluations were performed before the sub-optimization task was started for the first time. As soon as an improvement in the target value was detected, the sub-optimization task was terminated and the master continued with its best state updated to the result of the gradient-based optimizer. The interval between two sub-tasks was set to ![]() evaluations.
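The control flow of this alternating scheme can be sketched in Python under loudly stated assumptions: a greedy Gaussian perturbation search stands in for the VFSR master, a single forward-difference gradient estimate followed by step halving stands in for the gradient-based sub-optimizer, and only the master's evaluations are counted against `n_max`. The names `combined_optimize`, `local_descent`, `n_init`, and `n_interval` are hypothetical. What the sketch does reproduce is the interaction: the first sub-task starts after `n_init` master evaluations and later ones every `n_interval` evaluations, each sub-task starts from the master's best state and stops at the first improvement, and the master then continues from the improved point.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Objective = Callable[[Sequence[float]], float]


def clip(x: Sequence[float], lower: Sequence[float],
         upper: Sequence[float]) -> Vector:
    """Project a point onto the box [lower, upper]."""
    return [min(hi, max(lo, xi)) for xi, lo, hi in zip(x, lower, upper)]


def local_descent(f: Objective, x0: Vector, f0: float,
                  lower: Vector, upper: Vector, h: float = 1e-6,
                  step: float = 0.1, max_halvings: int = 20) -> Tuple[Vector, float]:
    """Hypothetical gradient-based sub-optimizer (f0 must equal f(x0)).

    Estimates the gradient at x0 by forward differences, then tries steepest
    descent steps of decreasing length and returns as soon as one of them
    improves on f0, i.e. the sub-task ends at the first improvement.
    """
    grad = []
    for i in range(len(x0)):
        xp = list(x0)
        xp[i] += h
        grad.append((f(xp) - f0) / h)
    for _ in range(max_halvings):
        trial = clip([xi - step * gi for xi, gi in zip(x0, grad)], lower, upper)
        ft = f(trial)
        if ft < f0:
            return trial, ft
        step *= 0.5
    return list(x0), f0


def combined_optimize(f: Objective, lower: Vector, upper: Vector, n_max: int,
                      n_init: int, n_interval: int, seed: int = 0):
    """Alternate a global master search with local sub-tasks.

    Returns the best point, its target value, and the best target value
    recorded after every master evaluation.
    """
    rng = random.Random(seed)
    best_x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
    best_f = f(best_x)
    evals = 1                      # master evaluations performed so far
    history = [best_f]
    while evals < n_max:
        # Placeholder global move (NOT the VFSR generating function):
        # Gaussian perturbation of the best state, clipped to the bounds.
        cand = clip([xi + rng.gauss(0.0, 0.1 * (hi - lo))
                     for xi, lo, hi in zip(best_x, lower, upper)], lower, upper)
        f_cand = f(cand)
        evals += 1
        if f_cand < best_f:
            best_x, best_f = cand, f_cand
        # First sub-task after n_init evaluations, then every n_interval.
        if evals >= n_init and (evals - n_init) % n_interval == 0:
            x_loc, f_loc = local_descent(f, best_x, best_f, lower, upper)
            if f_loc < best_f:
                # The master continues with its best state updated
                # to the result of the gradient-based optimizer.
                best_x, best_f = x_loc, f_loc
        history.append(best_f)
    return best_x, best_f, history
```

Because each sub-task stops at its first improvement, it costs only a few evaluations and hands control back to the global search quickly, which matches the early-termination rule described above.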


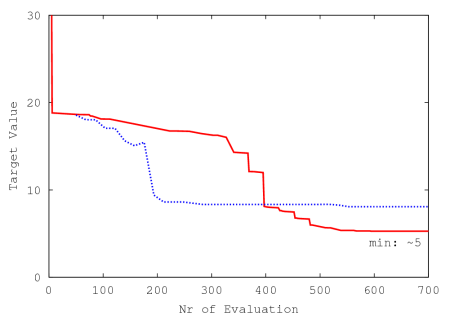

The strategy depicted in Fig. 5.26 yields the best target value for the given maximum number of evaluations (![]()) among all experiments carried out during this comparison of optimization strategies. Here ![]() initial evaluations were performed, and ![]() evaluations were performed between two consecutive sub-optimization runs.
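The two scheduling parameters trade off against each other: fewer initial evaluations or a shorter interval hand control to the local optimizer more often within the same evaluation budget. The helper below, a hypothetical `sub_run_starts`, lists the master-evaluation counts at which sub-optimization runs would start. It assumes that the first run starts after `n_init` master evaluations, that later runs follow every `n_interval` evaluations, and that the sub-optimizer's own evaluations are not charged to the master's counter; the actual accounting used in these experiments may differ.

```python
from typing import List


def sub_run_starts(n_max: int, n_init: int, n_interval: int) -> List[int]:
    """Master-evaluation counts at which a sub-optimization run is started.

    Assumes: first run after n_init evaluations, then every n_interval
    evaluations, up to and including n_max; sub-run evaluations not counted.
    """
    if n_init > n_max:
        return []  # the budget is exhausted before the first sub-run
    return list(range(n_init, n_max + 1, n_interval))
```

For example, with the purely illustrative values `n_max = 1000`, `n_init = 100`, and `n_interval = 50`, this schedule starts 19 sub-optimization runs.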


2003-03-27